Preface (2003)

I wrote this paper for, and presented it at, the seminar AI, Autonomous User Agents and CSCW, held at the Department of Trade and Industry, in London, June 1992. AI is Artificial Intelligence; and CSCW is Computer-Supported Co-operative Work, which was at the time a hot topic in academia and the fringes of industrial R&D (in this era the term 'groupware' had been around for a bit, but the Internet was still mostly restricted to research institutions and the World Wide Web was only just being invented).

The paper was published in Ernest Edmonds and John Connolly (editors) CSCW and Artificial Intelligence, London, Springer-Verlag (1994) — but this is impossible to find outside research libraries now, so I'm reproducing it here. The references to 'this volume' in the text refer to that book.

I'm not expecting anyone to read the full paper on screen: I invite you to use the print facility of your choice to get a paper copy. You're also more than welcome to comment on this paper here.

David Jennings

November 2003

On the Definition and Desirability of Autonomous User Agents in CSCW

It has been said by Alan Kay that user interfaces of the 1990s will not be tool-based as in the 1980s, but will instead be agent-based. How intelligent will these agents be? How will they be displayed? How will they support group working?

(from the publicity sheet for the seminar on "AI, Autonomous User Agents and CSCW")

Abstract

This chapter is principally concerned with User Agents as they might appear to users of CSCW systems: that is, with interface agents rather than software agents. Examples are given to show the difficulty of establishing a clear perspective on interface agents from the user-centred point of view. This difficulty is related to the differing conceptions of agents held within the computer- and social-sciences, and it is argued that this makes User Agents a poor starting point for design of CSCW systems. As an alternative, it is suggested that CSCW design should start from the task domains, stages and functions of groupwork, and that the role of agents should then be situated within this framework. Some initial steps towards developing this approach are sketched.

Introduction

In this chapter I address issues of how Autonomous User Agents (AUAs) might be displayed to users of CSCW systems. Thus I am more interested in interface agents than in software agents. Also my perspective is mainly taken from the social sciences, rather than the computer and cognitive sciences (which are the domains of the designers of software agents). At the risk of opening myself to criticism of armchair philosophising and possibly of trouble-making, I want to look at some conceptual and terminological issues to do with what might count as Autonomous User Agents. My aim in doing this is to clarify some of the domains where AUAs might and might not be a suitable basis for CSCW. I am also interested in adding a reflexive twist to this debate by looking at how computer/cognitive scientists can cooperate with social scientists on the definition and development of technologies like AUAs for CSCW. (Readers are also referred to the chapters by Smyth and by Benyon in this volume for discussion relevant to the present chapter.)

For the software designers of CSCW systems, the question of what constitutes an agent is usually fairly clear. An agent is part of the architecture of the system, with certain lines of code embodying it (note 1). For users, however, the question is not so cut and dried: outside the world of code, users perceive many beings functioning as agents of some sort, including humans as well as other animate and inanimate entities. I illustrate this with three examples of interfaces and agents that are not specific to CSCW.

Consider first the common example of a software application reminding or advising users that they are about to take an irreversible step, such as quitting without saving. This reminding function is the sort of task an interface agent could do: a little face could pop up and say, "If you quit now, you'll lose any changes you made. Do you want to ignore the changes, or would you like me to save them for you?" and so on. This is not a style that many applications have chosen. But it would be functionally equivalent, and either way the system appears to have some agency, whether or not there is an interface agent per se. The interface agent could be no more than a graphical representation (as in a video game), so it might not be a "proper" software agent. My point is that the use of interface agents is primarily a stylistic choice, one that we can separate from the use of software agents in the system architecture. While a software agent might be used to parse dates, for example (McGregor, Renfrew and MacLeod, this volume), it is unlikely that this function would be presented on the interface as an agent.

A typical expert system exhibits the features of agency and autonomy to varying degrees (in the sense that the users' inputs do not fully determine its outputs). However, if it is used in circumstances where users have discretion over whether to use the system, and whether or not to accept its "opinion" when they do use it, then that system is being treated by its users more as a tool than as an agent.

The final example is from an advertisement that caught my eye. In part it runs, "The new 1.1 version virtually installs itself... The new Intelligent User Assist automatically senses the communications adapter in your PC...[and then] pre-configures the software to suit" (note 2). This reminded me of Norman's discussion of the installation of film in a projector in The Design of Everyday Things (1988). His argument there was that the task of working out which part of the projector to feed the film through placed too much strain on users, and that the solution to this was to internalise the function of film feeding in the design of the artefact, as has been done in the case of the video-cassette. As far as users are concerned this is an analogous solution to that achieved by the Intelligent User Assist. But we don't think of the housing of a video-cassette as an intelligent assistant! The solution only breaks down when the tape winds itself round the internal mechanism, or the software fails to install correctly. If this happens, users may be in a worse situation when the function is internalised than when it has to be done manually...

The point of this comparison is to demonstrate that agency as a quality or entity does not necessarily inhere in an artefact or system, but may be attributed by socio-cultural convention or individual choice. Woolgar (1985, 1989) has offered some suggestive accounts of the ways in which this attribution can take place. And he has proposed, on the basis of some ethnographic work, that representation technologies may undergo routines of socialization similar to those that people undergo, so that they may become trusted "agents of representation". In the CSCW context, this raises the question of whether AUAs are born or made. In other words, is the degree to which any interface is agent-based primarily a designers' issue or a users' issue?

Incorporating the Differing Viewpoints of Social- and Computer-Scientists

By now it may seem that once-simple terms have been clouded over with obfuscation and slippery usage! I have suggested that this confusion is more acute when we look at using agent-based systems than when we are designing them. Nevertheless, the existence of any confusion has implications for design practice. I shall characterise this confusion as being drawn up along the lines of social versus computer science. I shall then use some ideas developed by a social scientist (Leigh Star) in the context of distributed artificial intelligence (DAI) development. These ideas are pertinent because they address the questions:

How can two entities (or objects or nodes) with two different and irreconcilable epistemologies cooperate? If understanding is necessary for cooperation, as is widely stated in the DAI literature, what is the nature of an understanding that can cooperate across viewpoints?

(Star, 1989, p.42)

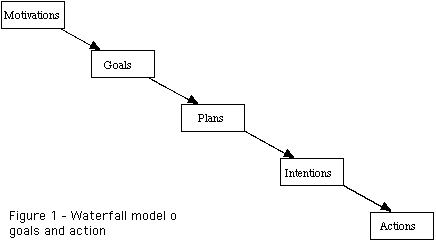

I want to characterize the computer/cognitive science model of autonomy and agency in terms of two main features. The first, which I call the "waterfall model" of intention and action, is encapsulated in the following passage and in Figure 1:

The autonomy assumption is important because I want to characterize actions as being "caused" in the following sense.... Motivations give rise to goals. Goals give rise to plans. Plans give rise to (among other things) intentions. Intentions give rise to actions. Some actions lead to effects in the physical world. It is the embodiment of the causal chain, particularly from goals to physical actions, which I will use as my definition of agency.

(Storrs, 1989, p.324)
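To make the one-way character of this chain concrete, here is a minimal Python sketch, assuming each link can be modelled as a function from one stage's output to the next. The stage labels come from the Storrs passage; the `waterfall` function and the placeholder transformations are hypothetical, and for simplicity every action is given an effect, although Storrs says only some actions have one.

```python
from typing import Callable

# Hypothetical model of Storrs's causal chain: each stage is derived only
# from the stage before it, and nothing downstream feeds back upstream.
Link = tuple[str, Callable[[str], str]]


def waterfall(motivation: str, links: list[Link]) -> dict[str, str]:
    """Run the chain from a motivation, recording every stage's output."""
    trace = {"motivation": motivation}
    current = motivation
    for label, derive in links:
        current = derive(current)  # depends on the previous stage alone
        trace[label] = current
    return trace


# Placeholder transformations that simply wrap their input, so the
# resulting trace shows the order in which the stages were derived.
CHAIN: list[Link] = [
    ("goal", lambda m: f"goal({m})"),
    ("plan", lambda g: f"plan({g})"),
    ("intention", lambda p: f"intention({p})"),
    ("action", lambda i: f"action({i})"),
    ("effect", lambda a: f"effect({a})"),  # Storrs: only *some* actions have effects
]

trace = waterfall("tidy desk", CHAIN)
print(trace["effect"])  # effect(action(intention(plan(goal(tidy desk)))))
```

What the sketch leaves out is the point: there is no step at which anyone else's reception or interpretation of the action alters the goal or the plan.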

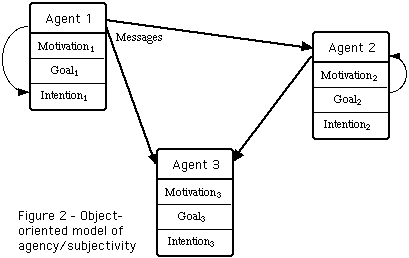

The second I call the "object-oriented model" of subjectivity and communication. This holds that agents are pre-given or pre-defined entities, and that the passing of messages between them may draw on or change their features in some way but leaves their fundamental nature unaltered and unquestioned. This model is represented in Figure 2.
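The object-oriented model can be sketched in a few lines of Python. This is an illustrative assumption, not a description of any particular system: the `Agent` class and its `send`/`receive` methods are hypothetical. The agent's name and kind are frozen at creation, while messages may update what it holds (its `beliefs`), so message passing changes an agent's features but never its fundamental nature.

```python
from dataclasses import FrozenInstanceError, dataclass, field


@dataclass(frozen=True, eq=False)
class Agent:
    """Hypothetical agent under the "object-oriented model".

    name and kind are fixed when the agent is created (the dataclass is
    frozen); beliefs is a mutable dict, so messages can update it in
    place without altering the agent's identity. eq=False keeps equality
    and hashing identity-based: each agent is simply itself.
    """

    name: str
    kind: str
    beliefs: dict[str, str] = field(default_factory=dict)

    def send(self, recipient: "Agent", topic: str, content: str) -> None:
        recipient.receive(self, topic, content)

    def receive(self, sender: "Agent", topic: str, content: str) -> None:
        # A message may draw on or change the agent's features...
        self.beliefs[topic] = f"{content} (from {sender.name})"
        # ...but nothing here can touch name or kind.


diary = Agent("diary", kind="scheduler")
ann = Agent("ann", kind="user proxy")
ann.send(diary, "meeting", "Tuesday 10:00")
print(diary.beliefs)  # {'meeting': 'Tuesday 10:00 (from ann)'}

try:
    diary.kind = "chairperson"  # the agent's nature cannot be renegotiated
except FrozenInstanceError:
    print("kind is fixed")
```

The social-science view described next would require the opposite design choice: an agent whose identity is constituted by the messages it exchanges, which this structure rules out from the start.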

These models are clearly applicable to software agents. By contrast, there is a school of thought in the social sciences (from Vygotsky and Heidegger through to Lacan and Foucault) which suggests that language, or "message passing", is the very fabric out of which our human subjectivity, our agency and our relative autonomy are woven. Our intentions and actions do not have meaning in and of themselves, but only through their reception and interpretation in the social, intersubjective realm (for attempts to work through some of the implications of this school for AI and Human-Computer Interaction, see Bateman, 1985; Jennings, 1991). This makes the problem of user modelling, on which the intelligent interface approach is based, considerably less tractable.

This account sketches briefly what is in fact a wide and rich variety of models. But if it gives an indication of the range of approaches, how can we reconcile such apparently incongruent perspectives? On the basis of studies of scientists from different backgrounds, Star (1989) suggests that successful cooperation can take place between parties who have different goals, employ different units of analysis, and have poor models of each other's work.

They do so by creating objects that serve much the same function as a blackboard in a DAI system. I call these boundary objects, and they are a major method of solving heterogeneous problems. Boundary objects are objects that are both plastic enough to adapt to the local needs and constraints of the several parties employing them, yet robust enough to maintain a common identity across sites. They are weakly structured in common use, and become strongly structured in individual-site use.

(Star, 1989, p.46)
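Star's description can be given a loose computational reading. The Python sketch below is only an illustration, assuming a boundary object can be modelled as a small shared core (a stable identity plus a few loosely specified common fields) to which each site attaches its own, more tightly structured, local detail. The class, method and site names are hypothetical and are not drawn from Star.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class BoundaryObject:
    """Hypothetical reading of Star's boundary object (not Star's own formalism)."""

    identity: str  # the common identity maintained across sites
    shared: dict[str, Any] = field(default_factory=dict)  # weakly structured core
    local: dict[str, dict[str, Any]] = field(default_factory=dict)  # per-site detail

    def adapt(self, site: str, **details: Any) -> None:
        """Plasticity: one site adds its own detail without affecting the others."""
        self.local.setdefault(site, {}).update(details)

    def view(self, site: str) -> dict[str, Any]:
        """A site's view: the shared core overlaid with that site's local detail.

        'identity' is written last so that no site's local detail can
        overwrite it (robustness of the common identity).
        """
        return {**self.shared, **self.local.get(site, {}), "identity": self.identity}


project = BoundaryObject("group project P1", shared={"stage": "Inception"})
project.adapt("social science", member_support="inclusion")
project.adapt("software design", shared_store="conference database")

print(project.view("software design"))
# {'stage': 'Inception', 'shared_store': 'conference database', 'identity': 'group project P1'}
```

Which fields belong in the shared core and which stay local is exactly what the cooperating parties would have to negotiate; the code only records the outcome of that negotiation.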

Star clearly hopes that the boundary object concept will itself become a boundary object to help social scientists and DAI developers cooperate successfully, and I share this hope. But what other boundary objects can we find to assist cooperation in the definition and development of CSCW and perhaps AUAs?

Unfortunately I do not think that the agent concept can fulfil the criteria for a useful boundary object and become part of the exchange currency between social and computer scientists. The discussion above shows that the concept of an agent is too plastic and not robust enough. And I think the same can probably be said of terms like "intelligent" and "autonomous".

Instead I would like to add my support to the position, common among social scientists in CSCW (e.g. Norman, 1991), that we should start from the patterns and requirements of cooperative work, and then move on to discuss appropriate computer support, including perhaps the use of interface agents. In the next sections, I start from groups, their task domains, and their stages and functions in a project. I hope that these will prove to be more robust concepts, and that they will help to clarify where the use of AUAs might be applicable (though see Schmidt and Bannon, 1992, for a critique that suggests that even the concept of a group may not be clearly bounded in organizational settings).

CSCW Task Domains

Firstly it helps to be clear about the sorts of task domain in CSCW. I propose a basic threefold classification, which is not a set of discrete categories, but more akin to three primary colours making up a full spectrum when mixed in various proportions. This is shown in Table 1.

| Task domain | Description |
|---|---|
| Applications | A person or group of people approach the system as a tool to achieve a particular goal in a task domain with which they are already familiar. They already know what type of output they want, and they use the system as a means of achieving this. |
| Communications medium/environment | A person or group of people approach the system as a means of sending, moderating, editing or receiving information, to, with and from other people. They may or may not know exactly what output they want, but they know that the means of achieving it is primarily through relationships and interactions with other people, rather than with the system per se. |
| Exploratory learning environment | A person or group of people approach the system with a limited knowledge of a particular topic or task domain. They do not have a specific output in mind from the interaction. |

TABLE 1 — CSCW Task Domains

These definitions inevitably blur into one another a bit. There may be family relationships between instances of each classification, but the family trees are also interlinked. Systems for real-time process control, shared information storage, and so on, can be seen as related to one or more of the families.

One particular thing to bear in mind about the classification is how the distinction between design and use cuts across it. For example, a system could be designed as an application to meet specific requirements, but could admit possibilities of use in other ways, as a communications medium or an exploratory environment (cf. the "task-artifact" framework of Carroll et al., 1991; see also Nardi and Miller, 1991, for an account of how spreadsheets can mediate collaborative work). So the classifications are clearly not mutually exclusive as far as use goes, and may not be for design either.

How does this classification help us think about agent-based interfaces in CSCW? Laurel (1990) describes interface agents as software entities which act on behalf of the user. They should display the features of:

- responsiveness,

- competence, and

- accessibility.

(Note that the features of acting on behalf of the user and being responsive would seem to imply a relative lack of autonomy — I return to this point further on.)

She further identifies the types of task that an agent might be suited to carrying out as:

- information — for example, to aid navigation and browsing, information retrieval, sorting and organizing, filtering;

- learning — giving help, coaching, tutoring;

- work — reminding, advising, programming, scheduling; and

- entertainment — playing with or against, performing.

It is important here to reiterate the distinction between Laurel's interface agents and other types of software agent. An interface agent is visible to users as an "independent" entity, and may engage them in dialogue or appear to manipulate the interface itself. The representation of the interface agent may or may not be driven by a software agent. Other types of software agent need not be visible to users (though of course users may still attribute the actions performed by such agents to the system's agency).

On the basis of Laurel's work, I suggest that interface agents are probably most suited for systems intended to be used primarily as exploratory learning environments. This is the situation where information and learning are likely to be most useful. And entertainment is a good way of seducing users into further exploration. Examples of effective uses of interface agents in learning environments are given by Oren et al (1990) — a system that offers tutorials on various perspectives on American history — and by Adelson (1992) — a French language-learning application.

However, I would take issue with the use of interface agents for reminding and advising users in the course of their work in some contexts. In the case of applications, where in my definition users are already familiar with the task domain in which they are operating, the use of agents who might appear to break into the flow of a task is questionable. This approach may be helpful when the user is about to take an irreversible step (e.g. quitting without saving, deleting a file). It can also be used when users' tasks can be relied on to follow a simple, invariant sequence. But such circumstances are rare, for, as Suchman (1987) showed, users' plans may be subject to constant revision, even for apparently simple tasks. So it may not be helpful to have an agent try to "second guess" a user, because more often than not the user will want to override, or at least check, the agent's decisions and actions. And this problem will increase dramatically if there are more than a small number of agents.

This reveals what I think is a fundamental tension in Human-Computer Interaction and CSCW. On the one hand there is the aim of subjugating the system to users' will and their goals. On the other there is the aim of giving the system (or its agents) the intelligence to be able to anticipate users' goals and behaviour, and the autonomy to act on this (see Jennings, 1991, for a fuller discussion of this tension). The optimum trade-off point between these conflicting aims will depend on the specifics of local context.

For communications media/environments the use of interface agents would on the face of it seem even less desirable than for applications. The common view is that communication should be as im-media-te as possible, with the features of face-to-face communication being the asymptote towards which development and progress should lead. Working from this assumption, the use of an agent (software or human) to mediate communication should be avoided wherever possible.

Actually I would argue, along with Hollan and Stornetta (1992), that even the face-to-face scenario is a medium of sorts, with particular features which mean that it is not best suited to all types of communication and cooperation. There are a number of ways in which (human) agents mediate interaction between people. Let us consider a few of these:

- policing or security agent;

- moderator or chairperson;

- gatekeeper, secretary or personal assistant;

- expert consultant;

- facilitator.

Where there are clear security rules that have been decided organizationally or politically, these could possibly be embedded in an interface agent. But in the case of a group chairperson the skills required are much fuzzier, making it less desirable to put an AUA in a position of power to which the system's users are subjugated. Systems should entrust floor control to informal social regulation wherever possible, and we should minimize the degree to which these systems force particular styles of coordination.

The gatekeeper role is traditionally seen as being of relatively low status, but this often masks the power associated with such positions. This power is by its nature informal: its rules are nowhere clearly specified. Wherever a system exerts any power over its users, the legitimacy and source of that power must be subject to careful scrutiny. I suggest that in cases where a system's power is not clearly an embodiment of agreed statutes, it will be more socially acceptable for that power to be meted out by human agents, on the grounds that they can be held more directly accountable.

But can we imagine instances where an interface agent has little or no direct power over its cooperating users? Perhaps an interface agent could be part of an on-line discussion team, which could call on it (as a responsive, competent and accessible member) to help with a particular issue. One way to ensure the acceptability of such an agent would be to make its actions very simple and transparent.

My own favourite "agent" is a sort of personal facilitator for tackling creative dilemmas. It is based on a simple pack of cards, so it is not very "intelligent". Brian Eno and Peter Schmidt developed 125 "Oblique Strategies", a set of lateral thinking prompts designed to help unfreeze the creative process, each of which is written on a card (note 3). You pick a card at random and then you adopt the strategy written on it. Why not apply this sort of facilitating principle to group dynamics? One of the groups that I participate in has experimented with de Bono's (1985) "Thinking Hats" approach, wherein six notional hats, each with a colour assigned to it, represent six interactional roles or strategies. For example: one role is a sort of "devil's advocate"; one gives emotive, gut reactions (positive or negative), while another is charged with seeding new ideas or growth. When group members put on one of the hats, they accept a discipline in the type of contribution they will make to the ensuing discussion. But they have first collectively assented to this discipline. Perhaps an AUA could (randomly?) assign hats to group members using a CSCW system? In some group contexts, this could, I think, represent an intelligent usage of a relatively unintelligent technology.
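The random hat assignment suggested above is simple enough to sketch. The following Python is a minimal illustration under stated assumptions: the function name `assign_hats` and its consent parameter are hypothetical, and the six colour glosses are a common short summary of de Bono's scheme rather than anything stated in this chapter (which describes only three of the roles). Hats are dealt from a shuffled "deck", so no hat is repeated until every hat has been handed out, and nothing is assigned unless every member has assented.

```python
import random
from typing import Optional

# De Bono's six hat colours. The glosses are an assumed short summary;
# the chapter itself describes only the black, red and green roles.
HATS = {
    "white": "facts and information",
    "red": "emotive, gut reactions",
    "black": "caution; the devil's advocate",
    "yellow": "benefits and optimism",
    "green": "new ideas and growth",
    "blue": "managing the discussion process",
}


def assign_hats(members: list[str], consented: set[str],
                rng: Optional[random.Random] = None) -> dict[str, str]:
    """Randomly give each member a hat, but only with everyone's assent.

    Hats are dealt from a shuffled deck; a fresh deck is shuffled only
    when members outnumber the six hats.
    """
    missing = [m for m in members if m not in consented]
    if missing:
        raise ValueError(f"no assignment without consent from: {', '.join(missing)}")
    if rng is None:
        rng = random.Random()
    deck: list[str] = []
    assignment: dict[str, str] = {}
    for member in members:
        if not deck:  # start a new round once every hat has been dealt
            deck = list(HATS)
            rng.shuffle(deck)
        assignment[member] = deck.pop()
    return assignment


group = ["Ann", "Bo", "Cy", "Di"]
for member, hat in assign_hats(group, consented=set(group)).items():
    print(f"{member}: {hat} hat ({HATS[hat]})")
```

Keeping the consent check inside the function mirrors the condition stressed in the paragraph: the discipline is accepted collectively before any hat is handed out, so the agent's power over members derives from their prior agreement.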

Group Stages and Group Functions

McGrath's (1990) framework of the stages and functions of group projects is useful for highlighting the factors which technologies to support groupwork must take into account. McGrath suggests that a group's project activity can be divided into up to four stages.

| Stage | Description |
|---|---|
| Stage I | Inception and acceptance of a project (goal choice). |
| Stage II | Solution of technical issues (means choice). |
| Stage III | Resolution of conflict, political issues (policy issues). |
| Stage IV | Execution of performance requirements of project (goal attainment). |

While Stages I and IV are by definition necessary parts of any successful group project, Stages II and III may be bypassed in some (simple) cases. The proportion of resources taken by each Stage may thus vary considerably.

McGrath elaborates his framework by classifying three types of function carried out within a group. As well as the production function, which contributes directly to the project goal, there is a member-support function, which is the group's contribution to its component parts, and a well-being function, which is the group's contribution to its own system viability. These functions are clarified further in Table 2.

| Stage | Production function | Member-support function | Group well-being function |
|---|---|---|---|
| Inception (Goal Choices) | Production Opportunity/Demand | Inclusion Opportunity/Demand | Interaction Opportunity/Demand |
| Problem solving (Means Choices) | Technical Problem Solving | Position Status Attainments | Role Net Definition |
| Conflict resolution (Political Choices) | Policy Conflict Resolution | Payoff Allocation | Power Distribution |
| Execution (Goal Attainment) | Performance | Participation | Interaction |

TABLE 2 — Group Stages and Functions (reproduced from McGrath, 1990, p.30)

How can we use this framework to ascertain what sort of computer support would be useful for group project activities? If we take the contents of each cell in the table, can we establish where the use of AUAs would be (a) possible and (b) desirable?

First, I make the human-centred assumption that the group comprises only human members; AUAs would not count as group members (note 4). From this assumption there appears to be minimal opportunity for AUAs to support any of the member-support functions in the middle column, as these seem to be essentially social in nature.

At the Inception stage, computer support clearly has a contribution to make. More potential stakeholders or group members can be given the opportunity to interact in making the goal choice. But in many cases, electronic mail or conferencing systems will provide adequate support to this process; and it is not clear what added value AUAs could provide in this area.

Moving to the second row of the table, the Thinking Hats example discussed earlier provides an example of a particularly self-conscious form of Role net definition. This sort of strategy could be usefully deployed for technical problem-solving as well. Other types of computer support for this stage would include shared databases, possibly with some intelligence built into the front-end, and computerized domain expertise, which could take either tool-based or agent-based form.

The Conflict resolution stage is more problematic, particularly when we take into account what I have already argued about the "interference" of systems in power relations. Such interference is normally perceived when the system reifies a power relationship which, in the normal flow of human discourse, would be subject to constant renegotiation. It could be argued, against this, that AUAs could be used to facilitate the process of group interaction leading to political choices. But anyone who has tried to facilitate a debate on a hot political issue will tell you that, even with the most neutral of intentions and strategies, it is very hard to convince opposing factions that you are being impartial. The ease with which any "losing" party could use the system as a scapegoat for reaching the "wrong" decision could outweigh any advantage from the system being perceived initially as neutral.

In fact the situation is complicated by the possibility (not acknowledged in the table) that the distinction between technical and policy choices may sometimes be blurred and may itself be negotiated within the group. One of the best ways to win policy arguments is to present the issue as a technical one for which you possess the relevant expertise (consider the example of this chapter, which aims to construe social science as the appropriate discipline to tackle questions about AUAs!).

Finally, at the Execution stage, groupware applications and communication media could be useful both for task performance and for supporting interaction between group members.

What this discussion shows is that, among the group activities which computers could support, there are few if any which intrinsically call out for AUAs as a design solution. That is not to say that AUAs will not be a part of some useful CSCW designs in particular contexts (though I have indicated that there are some areas of group activity where the use of AUAs might be contentious or deleterious). What it does mean is that to focus on AUAs as an interface style to drive CSCW design would be to put the cart before the horse.

Conclusions

Let me summarise my key points.

- There is a distinction between software agents and interface agents — it is possible to have one without the other.

- Users' attribution of agent qualities to any type of technology is affected by many factors, not just by designers' intentions.

- The use of agent-based interfaces is primarily a choice of style rather than function.

- The agent-based style may be most appropriate for attracting and interesting users in exploratory learning environments, and possibly for supporting innovative approaches to group dynamics.

- Intelligent user agents per se are not a good starting point for cross-fertilization of ideas between social and computer scientists, or for CSCW design decisions.

- Instead we should start from task domains, group project activities and group circumstances, and leave decisions about interface agents to later in the design process.

- There is a broad range of group project activities, and computer support should be directed to only those activities where it is appropriate and non-controversial.

To conclude, I will answer the questions which I quoted at the head of this chapter in an admittedly glib, but hopefully provocative manner.

How intelligent will... agents be?

I am tempted to say that their intelligence will be inversely proportional to their designers'. That is, a good designer would produce a design of sufficient elegance that no one element in it would need to be programmed with complex rules. However, artificial intelligence may be the best design solution when a system has to deal with a wide range of needs and uses. Also, because such questions are often affected by issues of standardization and the like, it is partly the intelligence of the design community as a whole that will decide the matter.

How will they be displayed?

Generally they won't be, except in some systems designed to provide learning environments. Intelligent agents may represent an effective basis for the architecture of DAI systems (I do not have the expertise to comment on this), but, largely, users want tools that they can use, not agents that appear to have ambitions to use them.

How will they support group working?

Software agents may support a range of simple operations which are naturally routine, or which the user community has previously agreed to routinize. Interface agents will be less common. They may support group performance activities and some aspects of technical problem solving. They may be used to draw group members into exploring learning environments. They could possibly be used to support lateral thinking and creativity in novel forms of group interaction. Computer support could also be useful as a means of making the group's progress towards its goals visible to all members, but it is unclear whether agents would be necessary or appropriate for this.

A case example

I believe that my position as outlined above is consistent with a consideration of the role of agents in the Information Lens and Object Lens systems (Crowston and Malone, 1988). These represent one of the best established approaches to software agents in CSCW. In the Information Lens, semi-structured messages are analyzed in terms of "frames" (again, a well-established AI concept that requires less sophistication than some more recent AI ideas). The agent that performs this analysis is not displayed on the system's user interface. The design is elegant (i.e. quite simple), but nevertheless requires that users agree to adopt common frameworks for their messages if its full power is to be used. Its most common uses are as an aid to performance and problem solving tasks like sifting large amounts of information (which is in fact essentially a single-user application) and scheduling meetings.
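The dependence on shared frames can be illustrated with a minimal Python sketch. It is not the Information Lens itself: the message types, field names, the rule format and the `sort_messages` function are all hypothetical, assuming only typed, semi-structured messages and an invisible agent that files them according to user-written rules (the rule mechanism is an assumption of this sketch). The third message shows the limitation noted above: a sender who ignores the common frames gains nothing from the rules.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    """A semi-structured message: a type (its frame) plus named fields."""

    msg_type: str
    fields: dict[str, str] = field(default_factory=dict)


@dataclass
class Rule:
    """Hypothetical user rule: file messages of msg_type whose fields contain the given text."""

    msg_type: str
    conditions: dict[str, str]  # field name -> text that must appear in it
    folder: str

    def matches(self, msg: Message) -> bool:
        return msg.msg_type == self.msg_type and all(
            text.lower() in msg.fields.get(name, "").lower()
            for name, text in self.conditions.items()
        )


def sort_messages(messages: list[Message], rules: list[Rule],
                  default: str = "inbox") -> dict[str, list[Message]]:
    """File each message under the first matching rule's folder, else the default."""
    folders: dict[str, list[Message]] = {}
    for msg in messages:
        folder = next((r.folder for r in rules if r.matches(msg)), default)
        folders.setdefault(folder, []).append(msg)
    return folders


rules = [Rule("meeting announcement", {"topic": "CSCW"}, "seminars")]
inbox = [
    Message("meeting announcement", {"topic": "CSCW and AI", "date": "June 1992"}),
    Message("meeting announcement", {"topic": "budget"}),
    Message("free text", {"body": "a note about CSCW"}),  # sender ignored the frames
]
for folder, msgs in sort_messages(inbox, rules).items():
    print(folder, len(msgs))  # prints "seminars 1", then "inbox 2"
```

The rule itself is trivially simple; what makes it useful is the prior social agreement on message types and field names, which is the point made in the paragraph.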

Acknowledgements

I would like to thank my colleague Ian Franklin for contributing ideas which helped develop this chapter.

The Oblique Strategy I used in drafting the original version was "Use fewer notes" (note 5).

Notes

1. However, the definition of an agent can apparently sometimes be unclear even to software designers. When a computer scientist was asked, at the end of presenting a paper to a recent CSCW conference, why he used the term "agents" to refer to parts of his architecture, he replied that, "'agents' sounds sexier — they are autonomous processes"!

2. The advert was for IRMA™ Workstation for Windows from Digital Communications Associates, in PC Magazine, 1991.

3. Oblique Strategies were originally devised by Brian Eno and Peter Schmidt in 1975. Some examples of the strategies are: "Retrace your steps", "Remove specifics and convert to ambiguities", "Honour thy error as a hidden intention", and "Cascades".

4. Woolgar (1985) demonstrates the possibility of questioning this assumption, so we should be clear that the assumption is backed up by a value judgment (that is, that it is not desirable to give the technology the same status as its users).

5. The strategy I used in completing the final draft was "The inconsistency principle".

References

Adelson, B., (1992), Evocative Agents and Multi-Media Interface Design. In Proceedings of CHI '92. Association for Computing Machinery, New York, 351–356.

Bateman, J., (1985), The Role of Language in the Maintenance of Intersubjectivity: A Computational Approach. In Gilbert, G.N., and Heath, C., editors, Social Action and Artificial Intelligence. Gower, Aldershot, 40–81.

de Bono, E., (1985), Six Thinking Hats. Penguin, Harmondsworth.

Carroll, J.M., Kellogg, W.A., and Rosson, M.B., (1991), The Task-Artifact Cycle. In Carroll, J.M., editor, Designing Interaction: Psychology at the Human-Computer Interface. Cambridge University Press, Cambridge.

Crowston, K., and Malone, T.W., (1988), Intelligent Software Agents. Byte, December 1988, 267–271.

Hollan, J., and Stornetta, S., (1992), Beyond Being There. In Proceedings of CHI '92. Association for Computing Machinery, New York, 119–125.

Jennings, D., (1991), Subjectivity and Intersubjectivity in Human-Computer Interaction. PAVIC Publications, Sheffield Hallam University, Sheffield.

Laurel, B., (1990), Interface Agents: Metaphors with Character. In Laurel, B., editor, The Art of Human-Computer Interface Design. Addison-Wesley, Reading, MA.

McGrath, J.E., (1990), Time Matters in Groups. In Galegher, J., Kraut, R.E., and Egido, C., editors, Intellectual Teamwork: Social and Technological Foundations of Cooperative Work. Lawrence Erlbaum, Hillsdale, NJ.

Nardi, B.A., and Miller, J.R., (1991), Twinkling Lights and Nested Loops: Distributed Problem Solving and Spreadsheet Development. International Journal of Man-Machine Studies, Vol 34, 161–184.

Norman, D.A., (1988), The Design of Everyday Things. Basic Books, New York.

Norman, D.A., (1991), Collaborative Computing: Collaboration First, Computing Second. Communications of the ACM, Vol 34, No 12, 88–90.

Oren, T., Salomon, G., Kreitman, K., and Don, A., (1990), Guides: Characterizing the Interface. In Laurel, B., editor, The Art of Human-Computer Interface Design. Addison-Wesley, Reading, MA.

Schmidt, K., and Bannon, L., (1992), Taking CSCW Seriously: Supporting Articulation Work. Computer Supported Cooperative Work, Vol 1, Nos 1–2, 7–40.

Star, S.L., (1989), The Structure of Ill-Structured Solutions: Boundary Objects and Heterogeneous Distributed Problem Solving. In Gasser, L., and Huhns, M.N., editors, Distributed Artificial Intelligence: Volume 2. Pitman, London.

Storrs, G., (1989), A Conceptual Model of Human-Computer Interaction? Behaviour and Information Technology, Vol 8, 323–334.

Suchman, L.A., (1987), Plans and Situated Actions: The Problem of Human-Machine Communication. Cambridge University Press, Cambridge.

Woolgar, S., (1985), Why not a Sociology of Machines? The Case of Sociology and Artificial Intelligence. Sociology, Vol 19, 557–572.

Woolgar, S., (1989), Representation, Cognition, Self. In Fuller, S., de Mey, M., and Woolgar, S., editors, The Cognitive Turn: Sociological and Psychological Perspectives in the Study of Science. Kluwer, Dordrecht.
